New AI systems collide with copyright law

Kelly McKernan says she “felt sick” when she discovered her artwork had been used to train an artificial intelligence system.

She entered her name into the Have I Been Trained website out of curiosity. The site searches LAION, a data set used to train artificial intelligence (AI) image generators such as Stable Diffusion.

She discovered that more than 50 pieces of her art had been uploaded to LAION.

The watercolour and acrylic illustrator from Tennessee says: “Suddenly all of these paintings that I had a personal relationship, and journey with, had a new meaning, it changed my relationship with those artworks.”

“I felt abused. My career and the careers of many other people are affected if someone can type my name into an AI program to create a book cover and not hire me.”

Massive volumes of text, image, and video data are used to train the next generation of generative AI systems.

Artists like Ms McKernan, though, are fighting back.

Together with cartoonist Sarah Andersen and illustrator Karla Ortiz, Ms McKernan has filed a lawsuit against three companies: Stability AI, the organization behind Stable Diffusion; Midjourney; and DeviantArt, an online art community with its own generator called DreamUp.

It adds to a rising number of legal actions against AI companies, as both sides grapple with copyright questions.

Earlier last year, Stability AI was sued by Getty Images, which alleges that it unlawfully copied and processed 12 million of the company’s pictures without authorization.

Eva Toorenent, who illustrates creature designs, monsters and fantasy art, says she started to worry about AI after visiting a show, where she was startled to find a work of art that resembled her own.

Ms McKernan says artists need stronger regulation and protection. “As things stand, copyright can only be used to protect my entire image. In order to prevent humans from being replaced by AI, I hope that this case promotes protection for artists. I hope lots of artists get rewarded if we win. It’s free labor, and some individuals are making money by utilizing it.”

In December, Stability AI announced that artists could opt out of the next iteration of Stable Diffusion. That did not go down well with artists who believe “opt-in” should be the default.

Ms Toorenent responds: “First of all, I would never put my work into it. But it should be an opt-in process if artists do want to.”

Countries are scrambling to react to these new powerful forms of AI.

The EU appears to be taking the lead, with the EU AI Act proposing that AI tools will have to disclose any copyrighted material used to train their systems.

In the UK, a global summit on AI safety will take place this autumn.

“AI throws up lots of intellectual property queries and because machines are trained on a lot of data and information that’s protected by intellectual property, I’m not sure users or AI [companies] understand that,” says Arty Rajendra, an IP lawyer and partner at law firm Osborne Clarke.

“Courts haven’t been asked to determine it yet but there’s several cases in the UK and US including Getty now which will determine if it is infringement and who is liable. There’s data protections and moral questions that have to be answered as well. What we might see is some kind of settlements, and maybe some licence fees.”

She says there have been a number of litigation cases brought by photographers using the small claims track.

So what can other artists do in the meantime?

Ms Rajendra explains that the giant photography firm Getty watermarked its images. When those images are used to generate AI images, the watermark still shows up, which lets the company track how its images are used. She says artists could do the same.

Artists could also approach the AI company and ask for a licence fee and, if it does not agree, pursue legal action through the small claims track, which is cheaper than hiring a fancy law firm, she says.

While regulators play catch-up, some tools are emerging to help protect artists.

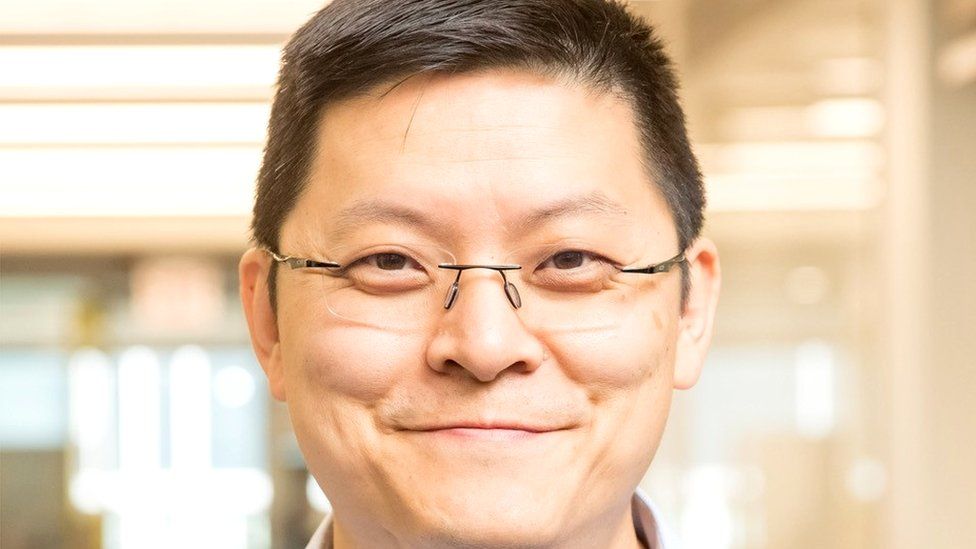

In March, Ben Zhao, professor of computer science at the University of Chicago, and his team launched a free software tool called Glaze to help protect artists against generative AI models.

Glaze exploits a fundamental difference between how humans and AI models view images, says Prof Zhao.

“For each image, we are able to compute a small set of pixel-level changes that dramatically change how an AI art model ‘sees’ the art while minimizing visual changes to how humans see the art,” he says.

“When artists glaze their art, and that art is then used to train a model to mimic [it], then the model sees an incorrect representation of the art style, and its mimicry would be useless and not match the artist.”
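The idea Prof Zhao describes, tiny pixel changes that move a model's view of an image a long way, can be illustrated with a toy sketch. This is not Glaze's algorithm, which the article does not detail. It assumes a made-up linear "model" (`feature`), a hypothetical helper (`perturb`), invented `weights` and a per-pixel limit `epsilon`, and it nudges each pixel by at most that limit in whichever direction shifts the model's output most.

```python
# Toy illustration only: NOT Glaze's actual method.
# All names and numbers here are hypothetical, chosen for the example.

def feature(pixels, weights):
    """A stand-in 'model': a single weighted sum of pixel values."""
    return sum(p * w for p, w in zip(pixels, weights))


def perturb(pixels, weights, epsilon):
    """Nudge each pixel by at most epsilon in the direction that
    raises the toy feature, keeping values inside [0, 1]."""
    out = []
    for p, w in zip(pixels, weights):
        if w > 0:
            step = epsilon
        elif w < 0:
            step = -epsilon
        else:
            step = 0.0
        out.append(min(1.0, max(0.0, p + step)))
    return out


pixels = [0.5, 0.5, 0.5, 0.5]      # a four-pixel grey "image"
weights = [2.0, -1.0, 0.5, -3.0]   # assumed model weights
epsilon = 0.02                     # largest change allowed per pixel

cloaked = perturb(pixels, weights, epsilon)

# How much any single pixel moved (what a person would notice).
max_change = max(abs(a - b) for a, b in zip(pixels, cloaked))

# How much the toy model's output moved (what the "AI" notices).
shift = feature(cloaked, weights) - feature(pixels, weights)

print("largest pixel change:", max_change)
print("change in model feature:", shift)
```

In this toy case no pixel moves by more than 0.02 on a 0-to-1 scale, yet the model's feature shifts by roughly 0.13, because many small nudges add up in the direction the model is most sensitive to. Real image models are far more complex, so this shows only the general principle.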

He says Glaze is suitable for a wide range of art including black and white cartoons, classical oil paintings, flat art styles, and professional photography.

He says Glaze has had 938,600 downloads and the team has received thousands of emails, tweets and messages from artists across the globe. “The reaction has been overwhelming,” he says.

Ms Toorenent is feeling optimistic that artists may just win this fight. “I was pretty scared at the start due to the amount of online harassment but because we united and have a good support network to all this mess.

“I know we’re moving in the right direction. Public opinion has changed a lot. Originally people were saying ‘adapt or die’, and now everyone’s like ‘oh wait, this isn’t cool’.”
